Man meets Machine beats Man

Given my well-known and uncomplimentary views on legal awards ceremonies (you know, the largely arbitrary and ultimately worthless ones the legal directories run), I never thought I’d be confessing that I actually attended one. But last week that was the case and, what’s more, I was asked to attend as a technical judge and announce the results.

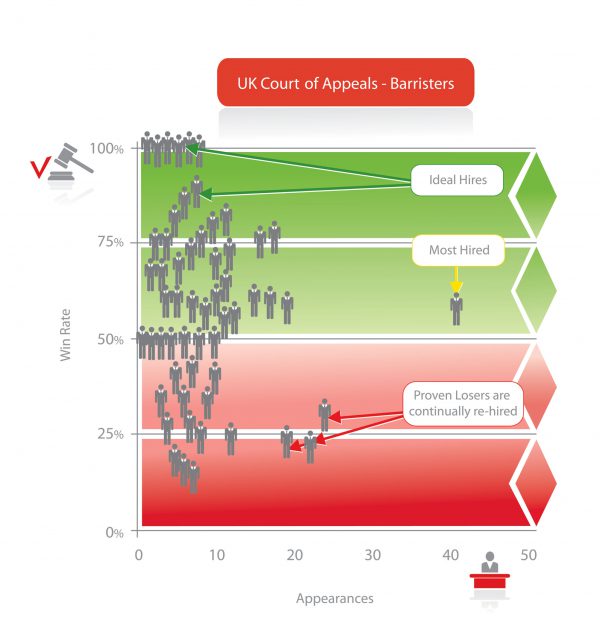

However, as you might have guessed, this was no ordinary awards ceremony. It was run by the smart young folk at CaseCrunch and featured a real competition, one with numbers, facts and statistics, billed by the hosts as “the first event in history to directly pit machines against lawyers”. I’m sure that headline sent a shiver down the spine of many a wood-paneled law firm.

Over a period of one week, participants including 100+ lawyers were asked to access facts of real PPI mis-selling complaints received by the Financial Ombudsman Service. They were then asked to predict whether the complaint was upheld or rejected. 775 predictions were submitted.

The CaseCrunch AI system was asked to review the exact same facts and make its own predictions. The side with the higher accuracy would win the competition.

Sadly, the lawyers fared badly against the machine as the competition produced a groundbreaking result. Lawyers managed only 62.3% accuracy, which wasn’t even within driving distance of the machine’s 86.6% score.

Ludwig Bull, scientific director at CaseCrunch, was quoted as saying, “Evaluating these results is tricky. These results do not mean that machines are generally better at predicting outcomes than human lawyers. These results show that if the question is defined precisely, machines are able to compete with and sometimes outperform human lawyers. The use case for these systems is clear. Legal decision prediction systems like ours can solve legal bottlenecks within organizations permanently and reliably. The main reason for the machine’s winning margin seemed to be that the network has a better grasp of the importance of non-legal factors.”

I enjoyed the event and had a real sense that I was witnessing groundbreaking stuff. The next stage will be to try to attach a value to the differences. If the real numbers are a human at 62.3% accuracy and £300 per hour for X hours, compared with AI at 86.6% accuracy and £17 per hour for the same X hours, then you’re left with a starkly contrasting bottom line.
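To put a rough number on that bottom line, here is a minimal back-of-the-envelope sketch. It uses the accuracy figures and hourly rates quoted above; the number of hours and the number of predictions are placeholders I have made up purely for illustration, as is the function name, and the "cost per correct prediction" measure is just one possible way to attach a value to the difference.

```python
def cost_per_correct_prediction(hourly_rate, hours, accuracy, predictions):
    """Total cost divided by the expected number of correct predictions."""
    total_cost = hourly_rate * hours
    expected_correct = accuracy * predictions
    return total_cost / expected_correct


# Assumed placeholders (not from the competition): both sides spend the
# same X hours on the same batch of complaints.
HOURS = 10.0
PREDICTIONS = 100

# Rates and accuracies as quoted in the article.
human = cost_per_correct_prediction(300.0, HOURS, 0.623, PREDICTIONS)
machine = cost_per_correct_prediction(17.0, HOURS, 0.866, PREDICTIONS)

# With identical hours and workload, both placeholders cancel out of the ratio.
ratio = human / machine

print(f"Human:   £{human:.2f} per correct prediction")
print(f"Machine: £{machine:.2f} per correct prediction")
print(f"Ratio:   {ratio:.1f}x")
```

Because the hours and the workload are assumed equal for both sides, they drop out of the ratio, which depends only on the rates and accuracies. On those figures the human comes out somewhere between 24 and 25 times more expensive per correct prediction.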

Last year, AI was used to predict the outcome of cases in the European Court of Human Rights and got it right in 79% of cases. Those who think this sort of stuff is a gimmick might like to think again as the accelerating pace of change starts to really impact on this dusty old profession. As for my view on awards ceremonies, it hasn’t changed, and perhaps this comment, taken from a LinkedIn comments thread about the event, best sums it up …

“A legal awards ceremony based on verifiable empirical evidence rather than peer recommendation or the number of pompous social media posts? That’s plain heresy. Pass me my bell, book and candle.” – Christopher Deadman

[Article written by Ian Dodd, Premonition UK Director]